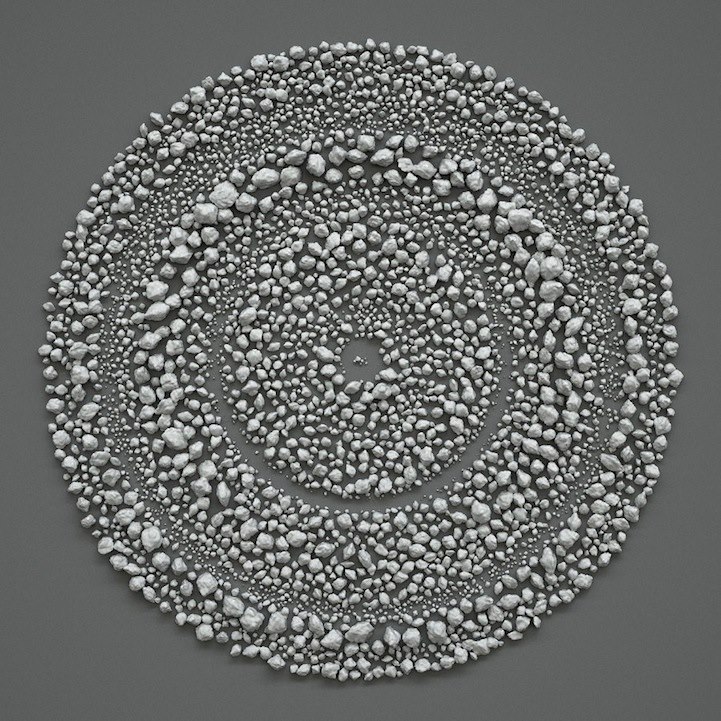

Rafael Lozano-Hemmer

Saturation Sampler

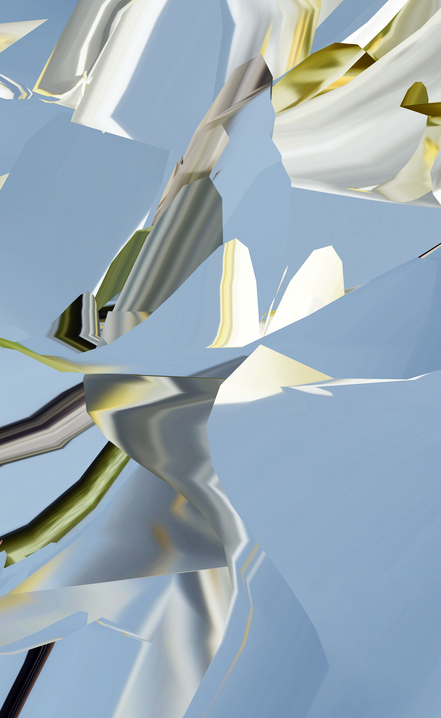

Rafael Lozano-Hemmer’s Saturation Sampler uses AI computer vision to track onlookers and extract the most saturated color palettes from their bodies and clothes. It assembles the footage into a gridded composition in which viewers catch glimpses of their reflections in the pixelated field. With the widest color gamut available and an unparalleled 160-degree viewing angle, Luma Canvas delivers a viewing experience unlike any other. The direct emissive nature of the display’s LEDs creates a visceral and material encounter with Lozano-Hemmer’s interactive work, meaningfully situating the digital piece within the physical realm.