QUBIT AI: Robert Seidel

HYSTERESIS

FILE 2024 | Aesthetic Synthetics

International Electronic Language Festival

Robert Seidel – HYSTERESIS – Germany

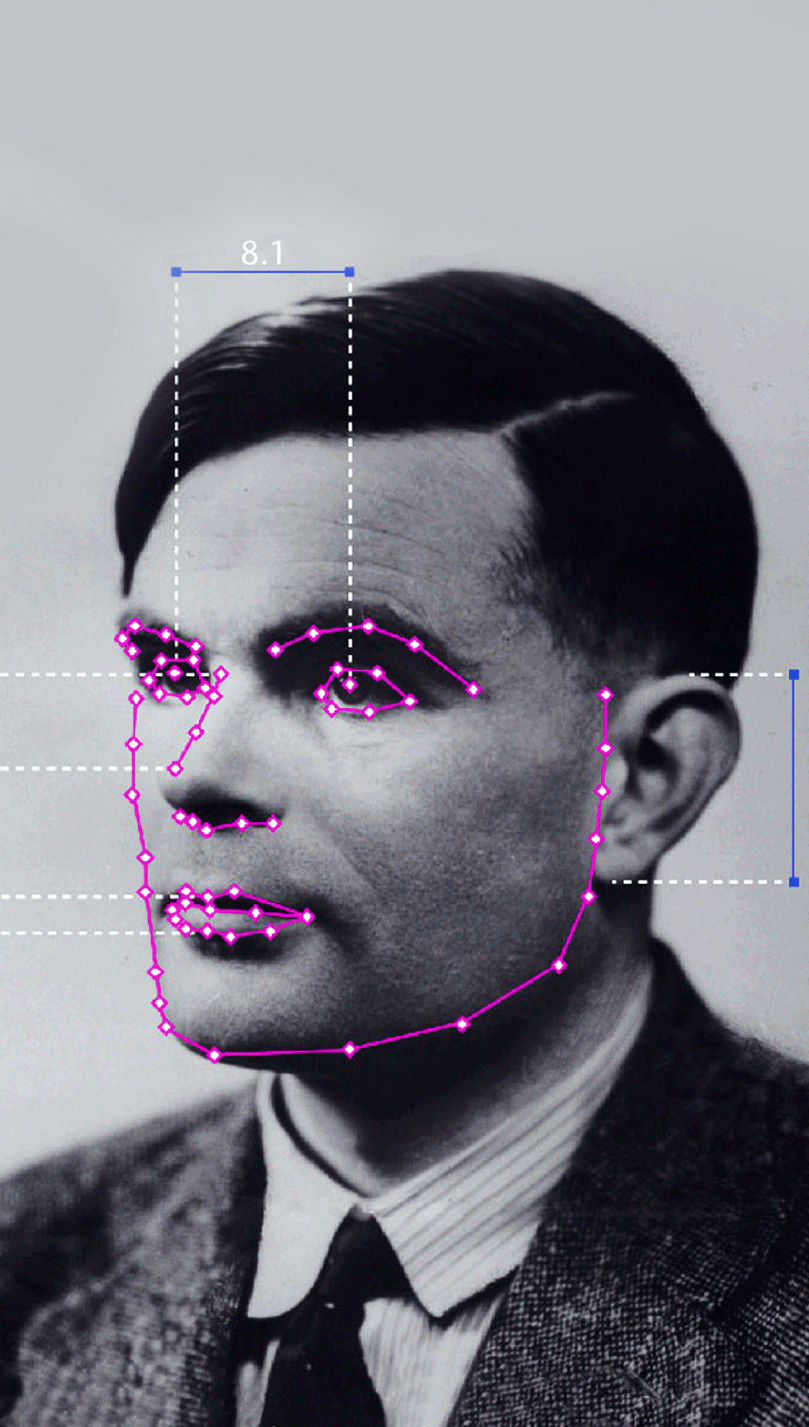

HYSTERESIS intimately weaves a transformative fabric between Robert Seidel’s projections of abstract drawings and queer performer Tsuki’s vigorous choreography. Using machine learning to mediate these delayed re-presentations, the film intentionally corrupts AI strategies to reveal a frenetic, delicate and extravagant visual language that portrays hysteria and hysteresis in this historical moment.

Bio

Robert Seidel is interested in exploring abstract beauty through cinematic techniques and insights from science and technology. His projections, installations and award-winning experimental films have been presented at numerous international festivals, art venues and museums.

Credits

Film: Robert Seidel

Music: Oval

Performer: Tsuki

Graphics: Bureau Now

5.1 Mixing: David Kamp

Support: Miriam Eichner, Carolin Israel, Falk Müller, Paul Seidel

Financing: German Federal Film Board