QUBIT AI: Valentin Rye

Around The Milky Way

FILE 2024 | Aesthetic Synthetics

International Electronic Language Festival

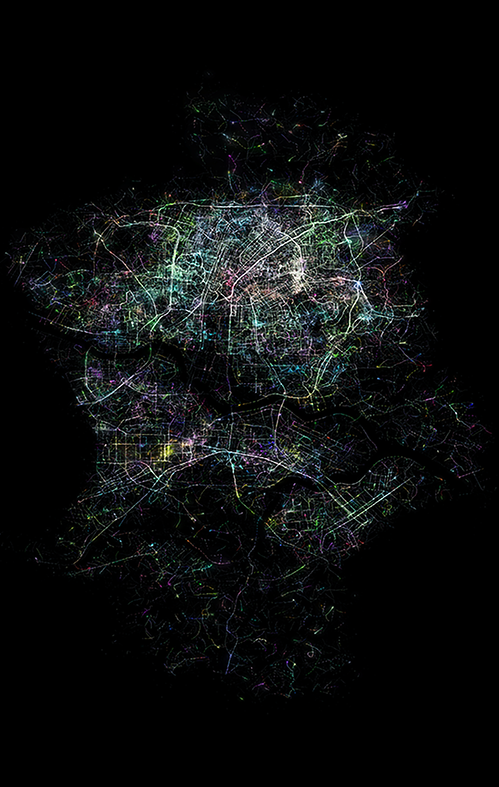

Valentin Rye – Around The Milky Way

In the distant future, several species inhabit the Milky Way. This scenario inspired the video, which aims to portray various forms of life and evoke a plausible atmosphere. Working from images he created, the artist experimented with Stable Video Diffusion, a new video generation method. His goal was to push the limits of this neural network and improve its output, and this video is the result.

Bio

Valentin Rye is a self-taught machine whisperer based in Copenhagen, deeply passionate about art, composition and the possibilities of technology. He has been involved in AI and neural network image manipulation since the arrival of DeepDream in 2015. Although his work in IT leaves little room for creativity, he devotes his free time to digital art, exploring graphics, web design, video, animation and image experimentation.