Memo Akten and Katie Peyton Hofstadter

Embodied Simulation

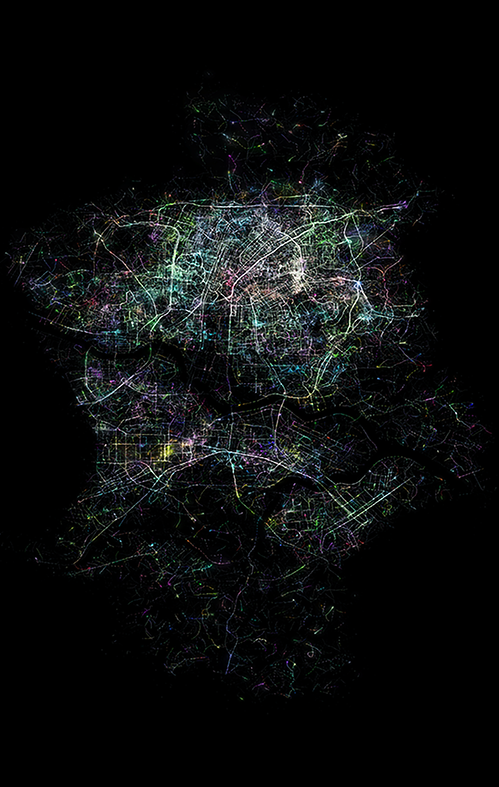

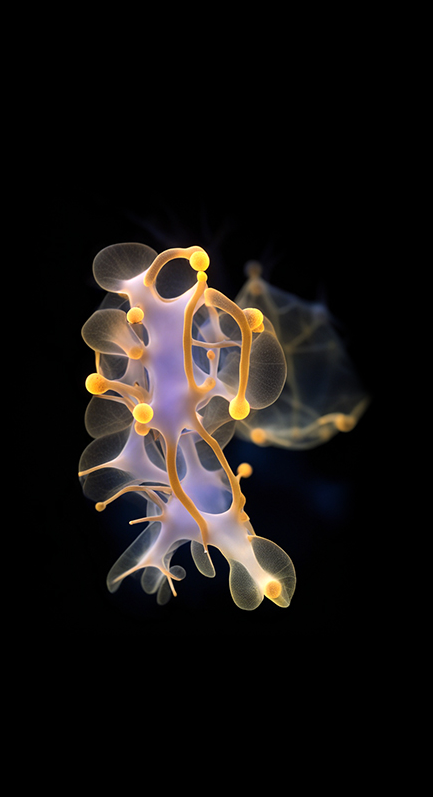

‘Embodied Simulation’ is a multiscreen video and sound installation that aims to provoke and nurture strong connections to the global ecosystems of which we are a part. The work combines artificial intelligence, dance, and research from neuroscience to create an immersive, embodied experience that extends the viewer’s bodily perception beyond the skin and into the environment.

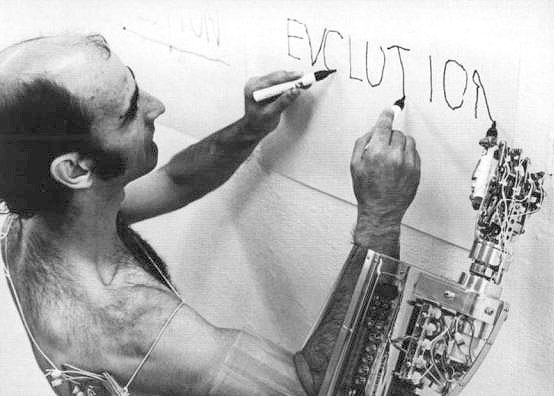

The cognitive phenomenon of embodied simulation (an evolved and refined version of ‘mirror neuron’ theory) refers to the way we feel and embody the movements of others as if they were happening in our own bodies. The brain of an observer unconsciously mirrors the movements of others, all the way through to planning and simulating the execution of those movements in the observer’s own body. This can extend even to embodying and ‘feeling’ the movement of fabric blowing in the wind. As Vittorio Gallese writes, “By means of a shared neural state realized in two different bodies that nevertheless obey to the same functional rules, the ‘objectual other’ becomes ‘another self’.”