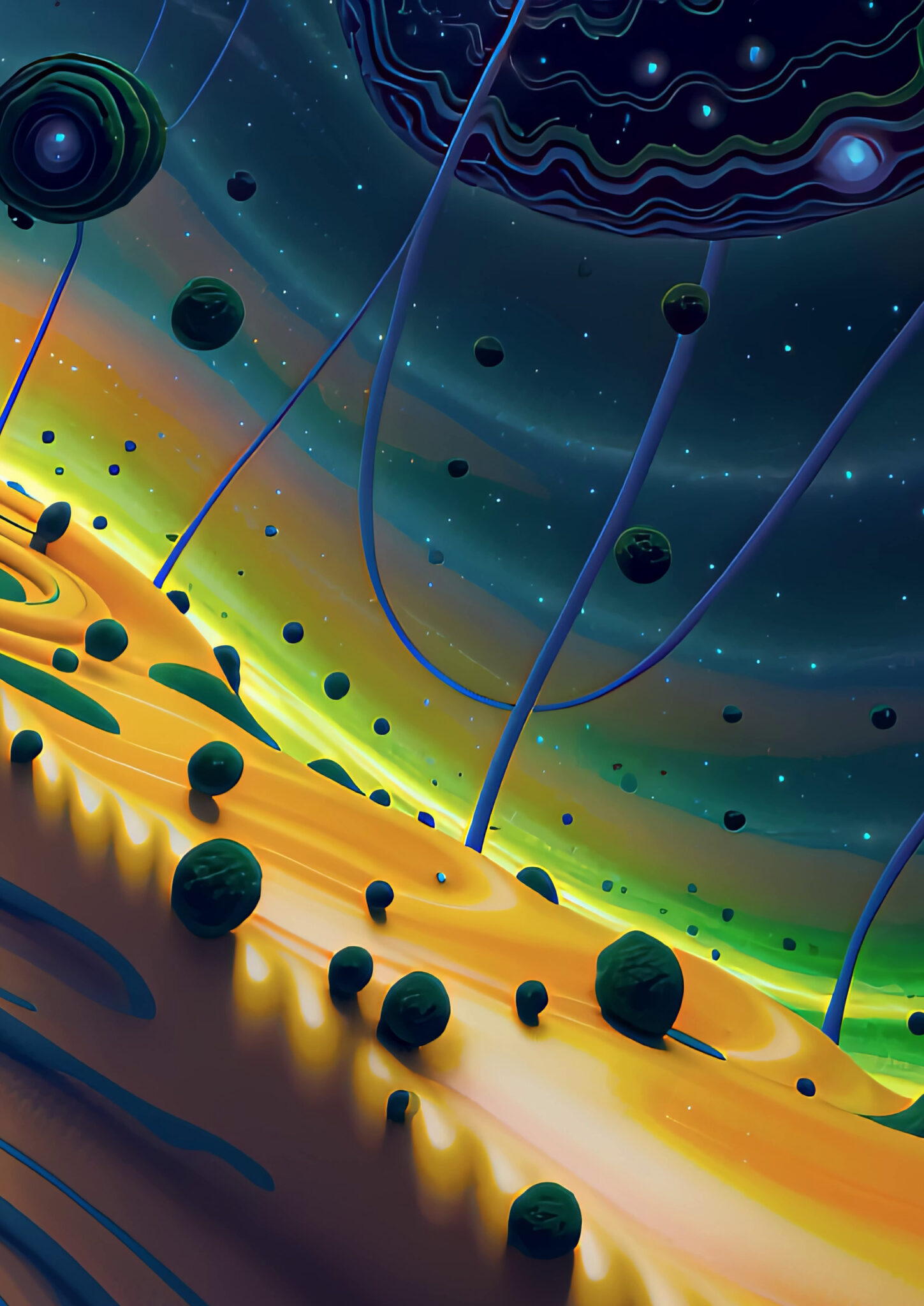

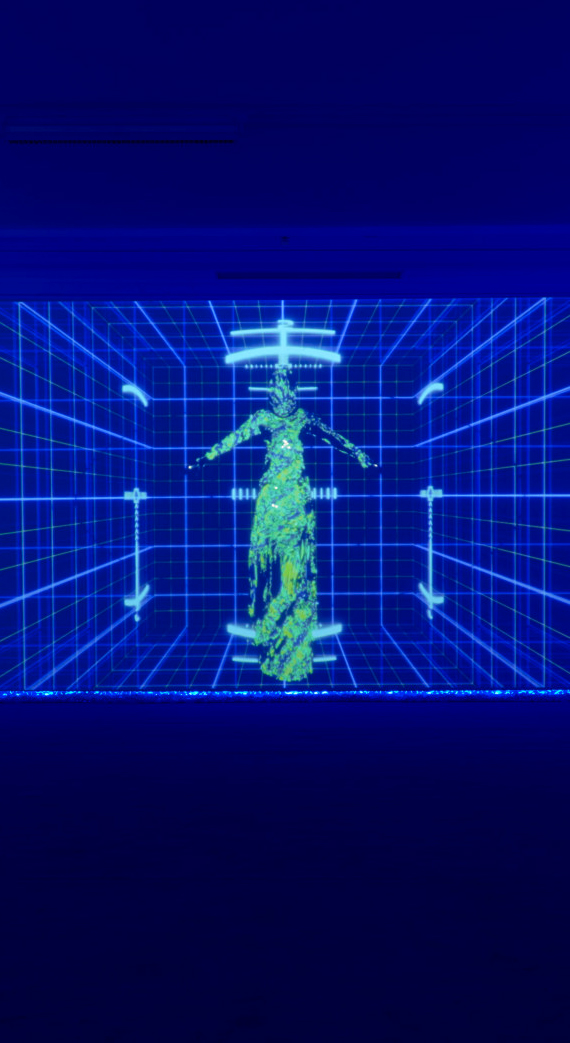

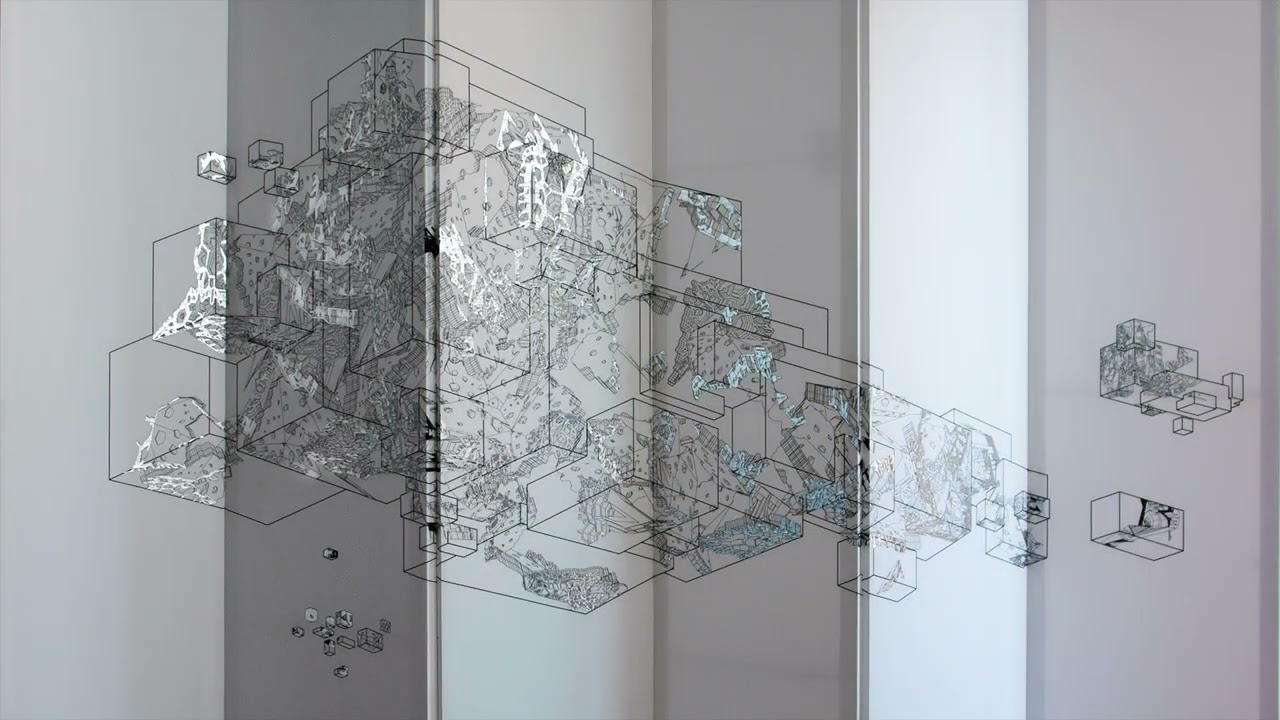

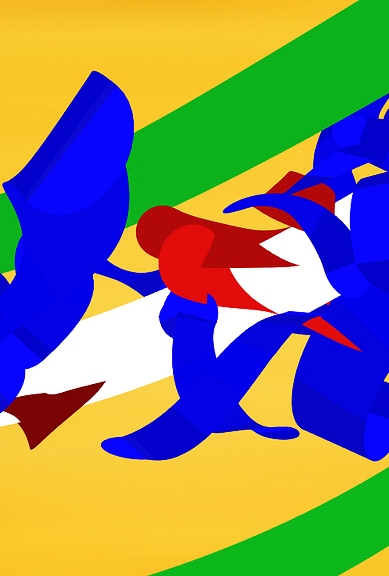

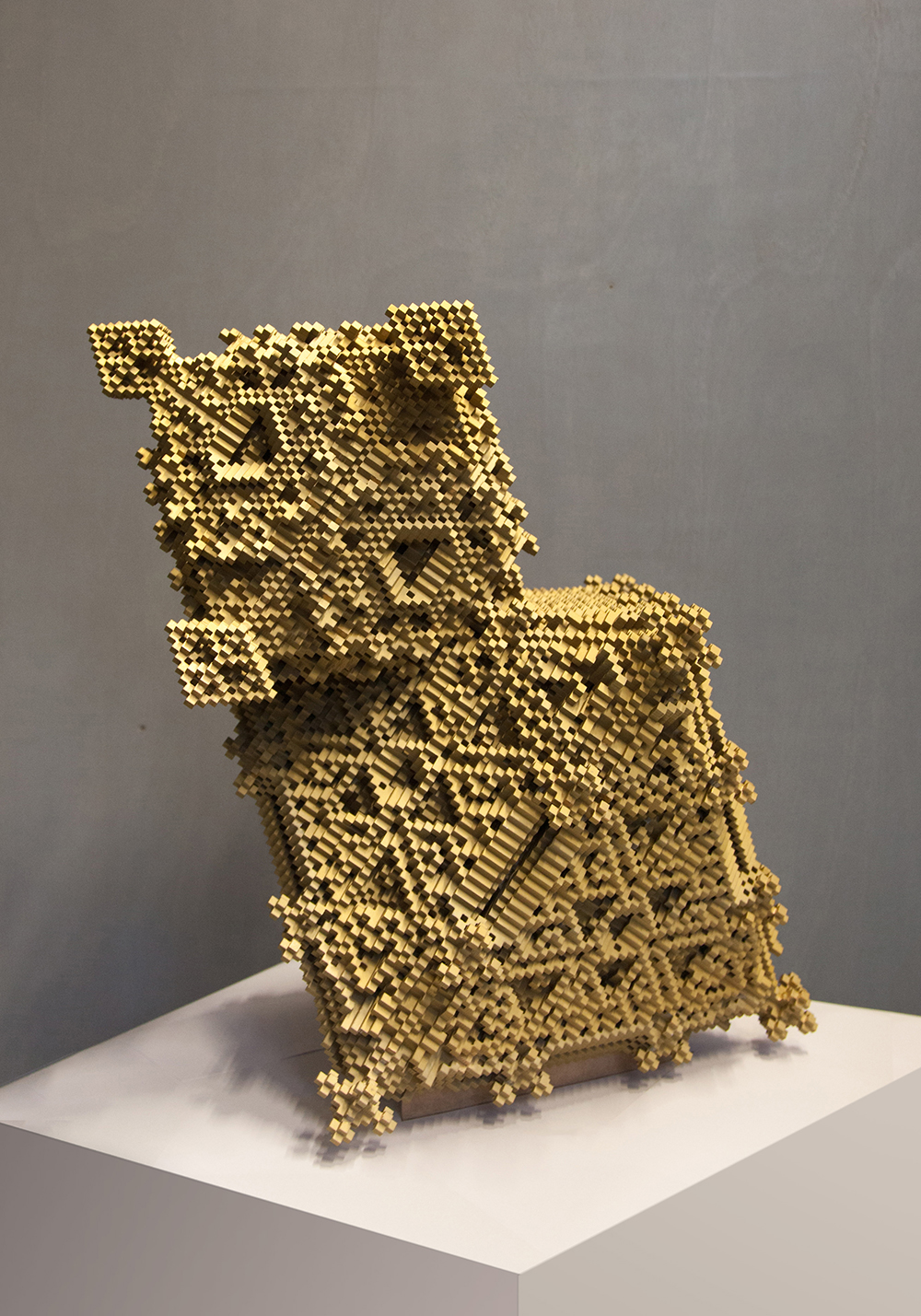

Synthetika: the age of artificial creativity | FILE 2025

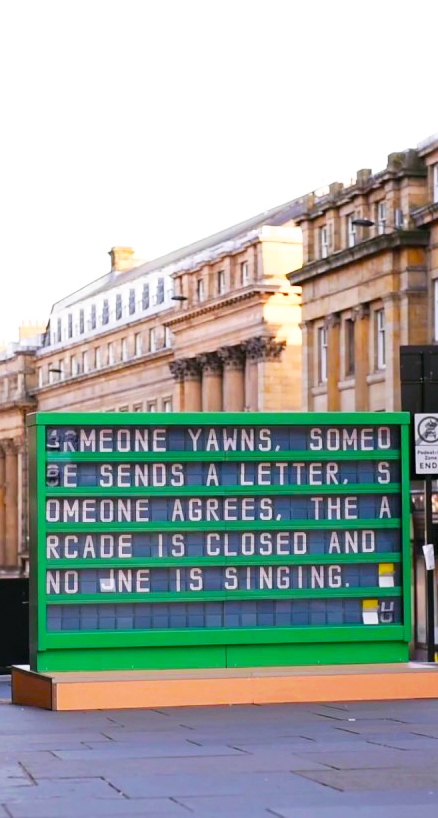

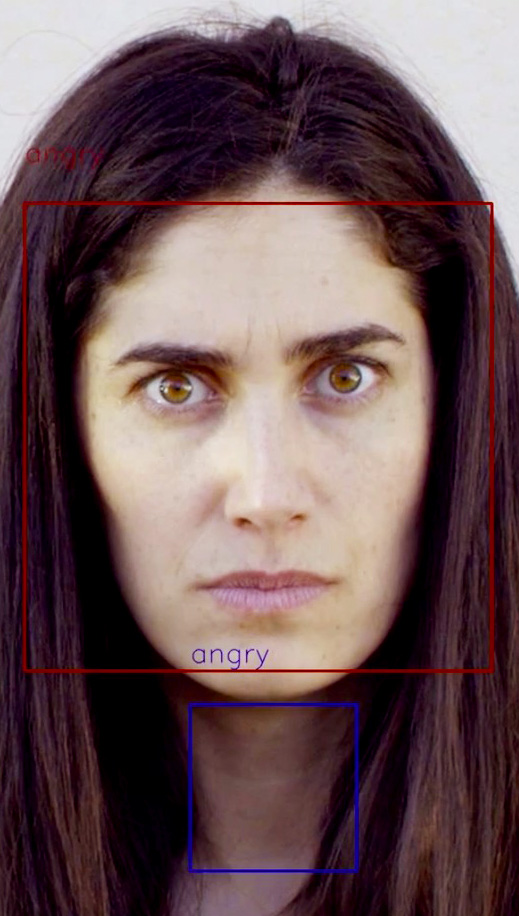

Unlike Hegel, who called the set of ideas of a given era the “spirit of the times” (Zeitgeist), we could call our era “Zeitsynthetik” (the time of the synthetic). In the classical period, art was inseparable from religion, whose essence was spirituality; in modernity, spirituality was replaced by the ideologies of grand narratives (capitalism and socialism). The classical arts invented poetics and aesthetics: the beautiful and the sublime. Modern art invented the avant-garde, which proposed to be revolutionary and whose driving force was the dialectic of the new without the old; the postmodernists, on the other hand, mixed everything with everything, including the old with the new. Today we live in the era of synthetic, disruptive technologies, in which the new of modernity is no longer sufficient or surprising. Syntheticity is the new vector: synthetic algorithms, synthetic virtual realities, synthetic intelligences. The driving force behind synthetic art is twofold: 1) the fusion of new art and technological innovation, and 2) the inter-creativity between the artist and artificial creativity. Prompt engineers strategically simulate personas for AIs in order to move away from triviality and thus obtain more creative results. Synthetic intelligences are no longer just instruments, but partners of artists in the construction of a creative and innovative symbiosis.

Art and culture are going through a moment in which creativity ceases to be only human and becomes artificial; syntheticity thus points toward a post-culture, a new FORM: the form SYNTHETIKA.

Ricardo Barreto

Curator and co-founder of FILE